Graph Neural Networking Challenge 2021

Creating a Scalable Network Digital Twin

ITU Artificial Intelligence/Machine Learning in 5G Challenge

ITU invites you to participate in the ITU Artificial Intelligence/Machine Learning in 5G Challenge, a competition which is scheduled to run from now until the end of the year. Participation in the Challenge is free of charge and open to all interested parties from countries that are members of ITU.

Detailed information about this competition can be found on the Challenge website.

BNN-UPC is glad to announce below the “Graph Neural Networking challenge 2021”, which is organized as part of the “ITU Artificial Intelligence/Machine Learning in 5G Challenge.”

This event has finished. Check for new editions at this [link]

Final ranking

| RANK | TEAM | MAPE (%) |
| --- | --- | --- |
| 1st | PARANA (2nd in the ITU AI/ML Challenge – 3000 CHF) | 1.267 |
| 2nd | SOFGNN (4th in the ITU AI/ML Challenge – 1000 CHF) | 1.389 |
| 3rd | ZTE AIOps | 1.853 |
| 4th | EricRe | 1.875 |
| 5th | GAIN | 28.739 |
| 6th | LARRENIE | 30.410 |
| 7th | Salzburg Research | 30.412 |
| 8th | GraphNet | 97.174 |
| 9th | Saankhya labs | 236.738 |
| 10th | penguinGNN | 289.116 |
| 11th | OptiMANET | 315.222 |
| 12th | C-DAC(T) | 340.754 |

Overview

Graph Neural Networks (GNN) have produced groundbreaking applications in many fields where data is fundamentally structured as graphs (e.g., chemistry, physics, biology, recommender systems). In the field of computer networks, this new type of neural networks is being rapidly adopted for a wide variety of use cases [1], particularly for those involving complex graphs (e.g., performance modeling, routing optimization, resource allocation in wireless networks).

The Graph Neural Networking challenge 2021 addresses a fundamental limitation of existing GNNs: their lack of generalization capability to larger graphs. To achieve production-ready GNN-based solutions, we need models that can be trained in network testbeds of limited size (e.g., at the vendor’s networking lab) and then be ready to operate with guarantees in real customer networks, which often have many more nodes. In this challenge, participants are asked to design GNN-based models that can be trained on small network scenarios (up to 50 nodes) and then scale successfully to larger, previously unseen networks of up to 300 nodes. Solutions with better scalability properties will be the winners.

Problem statement

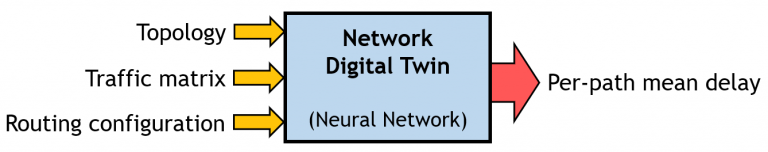

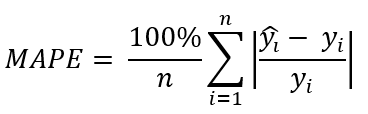

The goal of this challenge is to create a scalable Network Digital Twin based on neural networks, which can accurately estimate QoS performance metrics given a network state snapshot. In more detail, solutions must predict the resulting source-destination mean per-packet delay given: (i) a network topology, (ii) a source-destination traffic matrix, and (iii) a routing configuration (see Figure 1).

Figure 1: Schematic representation of the neural network-based solution targeted in this challenge

In particular, the objective of this challenge is to achieve a Network Digital Twin that can effectively scale to considerably larger networks than those seen during the training phase.
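To make the three inputs of the problem statement concrete, the following minimal sketch represents a toy network snapshot as plain Python dictionaries and derives per-link load and utilization from the traffic matrix and the routing. All names and values here (`link_capacity`, `traffic`, `routing`, the three-node topology) are hypothetical and do not come from the challenge dataset or its API; the sketch only illustrates how topology, traffic, and routing interact.

```python
from collections import defaultdict

# Hypothetical toy snapshot (not taken from the challenge dataset).
# (i) Topology: directed links with their capacity in bits per second.
link_capacity = {
    ("A", "B"): 10_000,
    ("B", "C"): 10_000,
    ("A", "C"): 5_000,
}

# (ii) Traffic matrix: average offered traffic per source-destination pair.
traffic = {
    ("A", "C"): 4_000,
    ("A", "B"): 3_000,
    ("B", "C"): 2_000,
}

# (iii) Routing configuration: the node sequence followed by each pair.
routing = {
    ("A", "C"): ["A", "B", "C"],
    ("A", "B"): ["A", "B"],
    ("B", "C"): ["B", "C"],
}


def link_loads(traffic, routing):
    """Sum the traffic of every source-destination path crossing each link."""
    loads = defaultdict(float)
    for pair, demand in traffic.items():
        path = routing[pair]
        # Consecutive node pairs along the path are the links it traverses.
        for hop in zip(path, path[1:]):
            loads[hop] += demand
    return dict(loads)


def link_utilization(loads, capacity):
    """Divide each link's load by its capacity; unused links get 0.0."""
    return {link: loads.get(link, 0.0) / cap for link, cap in capacity.items()}


loads = link_loads(traffic, routing)
utilization = link_utilization(loads, link_capacity)
```

In this toy case the A-B link carries two paths (7,000 bits per second in total), while the direct A-C link is unused because the routing sends A-to-C traffic through B. The per-path delay targeted by the challenge depends on this kind of coupling between paths and the links they share.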

Dataset

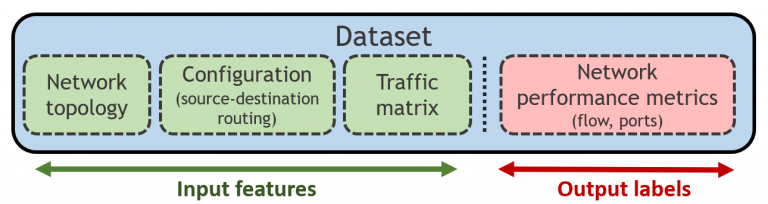

We provide a dataset generated with OMNeT++, a discrete-event packet-level network simulator. The dataset (Fig. 2) contains samples simulated in several topologies and includes hundreds of routing configurations and traffic matrices. Each sample is labeled with network performance metrics obtained by the simulator: per-source-destination performance measurements (mean per-packet delay, jitter and loss), and port statistics (e.g., queue utilization, size). Please note that this challenge focuses only on the prediction of mean per-packet delay for each source-destination path.

Data is divided into three different sets for training, validation and test. As the challenge is focused on scalability, the validation dataset contains samples of networks considerably larger (51-300 nodes) than those of the training dataset (25-50 nodes). Likewise, the test dataset will be released at the end of the challenge, just before the evaluation phase starts (see timeline at the bottom of this page), and it will contain samples following a similar distribution to those of the validation dataset.

Please find here a detailed description of the dataset and the download links:

To make it easy to read and process the data from our datasets, we provide a Python API [link]. We strongly recommend using this API, as it abstracts participants from the complex structure of our datasets.

Evaluation

The objective is to test the scalability properties of the proposed solutions. To this end, before the end of the challenge, we will provide a test dataset containing samples with distributions similar to those in the validation dataset. Participants must label this dataset using their neural network models and send the results (i.e., the estimated mean delays on src-dst paths) in TXT format. For the evaluation, we will use the Mean Absolute Percentage Error (MAPE) score computed over the delay predictions produced by candidate solutions. In its standard form, MAPE = (100% / n) · Σ |(y_i - ŷ_i) / y_i|, where y_i is the true mean delay of path i, ŷ_i is the predicted delay, and n is the number of src-dst paths evaluated.

Solutions with lower MAPE score will be the winners.
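As an illustration of the score, here is a minimal sketch that computes MAPE using its standard definition (the mean of the absolute relative errors, expressed as a percentage). The function name `mape` and the sample values are assumptions made for this example; the official evaluation platform may differ in details such as how the TXT files are parsed. The sketch also assumes that every true delay is strictly positive.

```python
def mape(true_delays, predicted_delays):
    """Mean Absolute Percentage Error, in percent.

    Assumes both sequences have the same length and that every
    true delay is strictly positive (otherwise the ratio is undefined).
    """
    if len(true_delays) != len(predicted_delays):
        raise ValueError("both sequences must have the same length")
    if not true_delays:
        raise ValueError("at least one sample is required")

    total = 0.0
    for y, y_hat in zip(true_delays, predicted_delays):
        # Relative error of one src-dst path prediction.
        total += abs((y - y_hat) / y)
    return 100.0 * total / len(true_delays)


# Hypothetical per-path mean delays (seconds) and model predictions.
true_delays = [0.10, 0.20, 0.40]
predicted_delays = [0.11, 0.18, 0.40]
score = mape(true_delays, predicted_delays)
```

Because each error is divided by the true delay, paths with very small delays weigh as much as paths with large ones, so a model cannot obtain a good score by fitting only the high-delay paths.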

During the evaluation, participants will be able to submit their solutions automatically on our evaluation platform and see a provisional ranking of all the teams in real time.

Note: The test dataset will not include any data related to flow performance measurements (i.e., flow delay, jitter, loss) or port statistics (i.e., utilization, occupancy, loss). This means that none of these features can be used as input to the proposed solutions. Those using the dataset API can assume that NO data under the performance_matrix and port_stats data structures can be used as input for the model.
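One simple way to guard against accidentally feeding these labels to a model is to filter them out before feature extraction. The sketch below assumes, purely for illustration, that a sample has been loaded into a flat Python dictionary; only the names `performance_matrix` and `port_stats` come from the note above, while the other keys and the helper `model_inputs` are hypothetical and do not reflect the actual dataset API.

```python
# Data structures that are absent from the test dataset and therefore
# must not be used as model inputs.
FORBIDDEN_KEYS = {"performance_matrix", "port_stats"}


def model_inputs(sample):
    """Return a copy of the sample without the forbidden data structures."""
    return {key: value for key, value in sample.items() if key not in FORBIDDEN_KEYS}


# Hypothetical sample layout, used only to exercise the filter.
sample = {
    "topology": {"A": ["B"], "B": ["A"]},
    "traffic_matrix": {("A", "B"): 3_000},
    "routing": {("A", "B"): ["A", "B"]},
    "performance_matrix": {("A", "B"): {"delay": 0.12}},
    "port_stats": {("A", "B"): {"utilization": 0.3}},
}

inputs = model_inputs(sample)
```

Applying the same filter to the training and validation samples keeps the input features consistent with what will be available in the test dataset.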

Baseline

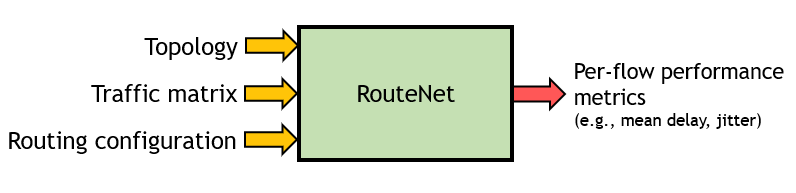

As a baseline, we provide RouteNet [2], a Graph Neural Network (GNN) architecture recently proposed to estimate end-to-end performance metrics in networks (e.g., delay, jitter, loss). Thanks to its GNN architecture, RouteNet revealed an unprecedented ability to make accurate performance predictions even in network scenarios unseen during the training phase, including other network topologies, routing configurations, and traffic matrices (Fig. 3).

Figure 3: Schematic representation of RouteNet

In this challenge, we extend the problem to modeling performance in networks considerably larger than those seen in the training phase. When RouteNet is trained on small-scale networks, such as those in the training dataset of this challenge, it is not able to produce accurate estimates in much larger networks, such as those in the validation and test datasets. As a result, we need a model offering better scalability properties. Achieving scalable GNN models remains an open problem in the Machine Learning field.
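For readers unfamiliar with this family of models, the following sketch shows a heavily simplified path-link message passing loop, loosely inspired by the idea described in [2]. It is not the RouteNet implementation: the learned recurrent units are replaced by a single `tanh` layer, the weights are random and untrained, and every name (`message_passing`, `STATE_DIM`, the toy paths and features) is an assumption made for this example. The predictions it returns are therefore meaningless; the point is only that the same weights can be applied to networks with any number of links and paths.

```python
import math
import random

STATE_DIM = 4  # size of each hidden state (hypothetical choice)


def make_matrix(rows, cols, rng):
    """Random weight matrix; a trained model would learn these values."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def update(weights, state, message):
    """tanh(W · [state; message]): a stand-in for a learned recurrent unit."""
    joined = state + message  # list concatenation
    return [math.tanh(sum(w * x for w, x in zip(row, joined))) for row in weights]


def message_passing(paths, link_features, path_features, iterations=3, seed=0):
    """Return one (untrained) scalar prediction per path.

    paths: for each path, the ordered list of link indices it traverses.
    """
    rng = random.Random(seed)
    w_path = make_matrix(STATE_DIM, 2 * STATE_DIM, rng)
    w_link = make_matrix(STATE_DIM, 2 * STATE_DIM, rng)
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(STATE_DIM)]

    # Initial states: the feature in the first entry, zero-padded.
    link_state = [[f] + [0.0] * (STATE_DIM - 1) for f in link_features]
    path_state = [[f] + [0.0] * (STATE_DIM - 1) for f in path_features]

    for _ in range(iterations):
        link_msg = [[0.0] * STATE_DIM for _ in link_features]
        for p, links in enumerate(paths):
            # Each path reads the states of its links in order...
            for l in links:
                path_state[p] = update(w_path, path_state[p], link_state[l])
                # ...and leaves its current state as a message on that link.
                link_msg[l] = [a + b for a, b in zip(link_msg[l], path_state[p])]
        # Each link aggregates the messages of the paths that cross it.
        link_state = [update(w_link, s, m) for s, m in zip(link_state, link_msg)]

    # Readout: a linear map from each final path state to a scalar.
    return [sum(w * s for w, s in zip(w_out, state)) for state in path_state]


# The same function handles a small and a larger (hypothetical) network.
small = message_passing([[0, 1], [1], [0, 1, 2]], [0.7, 0.6, 0.1], [0.4, 0.2, 0.3])
large = message_passing(
    [[0, 1, 2, 3], [4], [2, 3, 4], [1, 2]],
    [0.5, 0.4, 0.9, 0.3, 0.2],
    [0.1, 0.2, 0.3, 0.4],
)
```

Although the architecture itself is independent of network size, nothing guarantees that weights fitted on small networks yield accurate estimates on larger ones, for example because the range of feature values or the path lengths differ. That gap is the scalability problem this challenge targets.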

We provide two open source implementations of RouteNet, both including a tutorial on how to execute the code and modify fundamental characteristics of the model:

For those who don’t know IGNNITION yet, it is a high-level framework for fast prototyping of Graph Neural Networks, especially designed for applications in data networks. Please find more information at ignnition.net

Participants are encouraged to update RouteNet or design their own neural network architecture from scratch.

At this [link] you can find a slide deck introducing the challenge, along with some tips from the organizers for getting off to a good start.

Rules

IMPORTANT NOTE: Filling in the registration form is mandatory to officially participate in this challenge.

https://bnn.upc.edu/challenge/gnnet2021/registration

To participate in this challenge, the following rules must be satisfied:

- Participants can work in teams of up to 4 members (i.e., 1-4 members). All team members should be announced at the beginning (in the registration form) and will be considered to have contributed equally.

- Proposed solutions must be fundamentally based on neural network models, although they can include pre/post-processing steps to transform the input of the model, or compute the final output.

- The proposed solution cannot use network simulation tools or solutions derived from them.

- Solutions must be trained only with samples included in the training dataset we provide. Data augmentation techniques are allowed as long as they exclusively use data from the training dataset. However, it is not allowed to use additional data obtained from other sources or public datasets.

- During the evaluation process, each team can submit a maximum of 5 solutions (TXT files) per day. In total, teams can make up to 20 evaluation submissions. In case of receiving more submissions, only the first submissions up to these limits will be considered.

- The challenge is open to all participants except members of the organizing team and its associated research group “Barcelona Neural Networking Center-UPC”.

Note: In the challenge, you may use any existing neural network architecture (e.g., the RouteNet implementation we provide). However, it has to be trained from scratch and it must be clearly cited in the description of the solution. In the case of RouteNet, it should be cited as it is in [2].

In case of any personal questions about the rules, please contact the organizers via email to gnnetchallenge <at> bnn.upc.edu .

Final submissions and awards

After the score-based evaluation phase (see “Evaluation” section), a ranking of all the teams will be posted on this website.

Then, the top-5 teams must send to the organizers: (i) the code of the proposed neural network solution, (ii) the trained neural network model, and (iii) a brief document describing the proposed solution and how to reproduce it (1-3 pages). These materials will be reviewed to check that the solutions comply with all the rules of the challenge.

Top-3 solutions of this challenge will have access to the Grand Challenge Finale of the ITU AI/ML in 5G Challenge:

- 1st prize: 5,000 CHF

- 2nd prize: 3,000 CHF

- 3rd prize: 2,000 CHF

- And other prizes (e.g., runners up)

Moreover, the top-3 teams of this challenge will be recognized on this website and certificates of appreciation will be generated for them.

The organizers are open to (and encourage) publishing co-authored papers describing the top solutions proposed, always respecting the wishes of those participants who want to keep their solutions private.

Contact and updates

Subscribe to [Challenge2021 mailing list] for announcements and questions/comments.

Please note that you must subscribe to the mailing list before sending any email. Otherwise, emails are dropped by default.

Resources

We summarize below the main resources provided for this challenge:

- Summary slides (Link: http://2ja3zj1n4vsz2sq9zh82y3wi-wpengine.netdna-ssl.com/wp-content/uploads/2020/12/GNNet_challenge_2021-2.pdf)

- Round-table slides – Aug. 4th (Link: https://bnn.upc.edu/wp-content/uploads/2021/08/GNNet_challenge_round_table.pdf)

- Registration form (Link: https://bnn.upc.edu/challenge/gnnet2021/registration)

- Training/validation datasets (Link: https://bnn.upc.edu/challenge/gnnet2021/dataset)

- API to easily read the datasets provided (Link: https://github.com/BNN-UPC/datanetAPI/tree/challenge2021)

- Baseline implementation (RouteNet) including a brief tutorial:

  - IGNNITION v1.0.2 (Link: https://github.com/BNN-UPC/GNNetworkingChallenge/tree/2021_Routenet_iGNNition)

  - TensorFlow v2.4 (Link: https://github.com/BNN-UPC/GNNetworkingChallenge/tree/2021_Routenet_TF)

- Mailing list for questions and comments related to the challenge (Link to subscribe: https://mail.bnn.upc.edu/cgi-bin/mailman/listinfo/challenge2021).

Timeline

Please note that these dates are tentative and may change slightly over the course of the challenge. Stay tuned for further updates via the mailing list and also on this website.

Challenge duration: May 20th-Nov 20th 2021

- Open registration: Sep 14th (extended)

- Training and validation datasets release: May 31st

- Test dataset release: Sep 15th

- Score-based evaluation phase: Sep 16th-Sep 30th

- Provisional ranking of all the teams: Oct 1st

- Top-5 solutions submit their code and documentation: Oct 15th

- Final ranking and official announcement of the top-3 winning teams: Nov 1st

———————————

Top-3 teams will access the Grand Challenge Finale of the ITU AI/ML in 5G challenge:

- Evaluation by the ITU Judges Panel: November 2021

- ITU Awards and Final conference: December 2021 (6-10 December)

Organizers

BNN-UPC

AGH

Cite this competition

Plain text (IEEE format):

J. Suárez-Varela, et al., “The Graph Neural Networking challenge: a world-wide competition for education in AI/ML for networks,” ACM SIGCOMM Computer Communication Review, vol. 51, no. 3, pp. 9–16, 2021.

BibTEX:

@article{suarez2021graph,
  title={The graph neural networking challenge: a worldwide competition for education in AI/ML for networks},
  author={Su{\'a}rez-Varela, Jos{\'e} and others},
  journal={ACM SIGCOMM Computer Communication Review},
  volume={51},
  number={3},
  pages={9--16},
  year={2021}
}

References

[1] List of “Must-read papers on GNN for communication networks”, https://github.com/BNN-UPC/GNNPapersCommNets.

[2] K. Rusek, J. Suárez-Varela, A. Mestres, P. Barlet-Ros, A. Cabellos-Aparicio, “Unveiling the potential of Graph Neural Networks for network modeling and optimization in SDN,” In Proceedings of the ACM Symposium on SDN Research (SOSR), pp. 140-151, 2019. [ACM SOSR 2019] [arXiv]