Graph Neural Networking Challenge 2022

Improving Network Digital Twins through Data-centric AI

This event has finished. Check for new editions at this [link]

ITU AI/ML in 5G Challenge

ITU invites you to participate in the ITU Artificial Intelligence/Machine Learning in 5G Challenge, a competition which is scheduled to run from now until the end of the year. Participation in the Challenge is free of charge and open to all interested parties from countries that are members of ITU.

Detailed information about this competition can be found on the Challenge website.

BNN-UPC is glad to announce the “Graph Neural Networking challenge 2022”, which is organized as part of the “ITU AI/ML in 5G Challenge”.

Final ranking

| RANK | TEAM | MAPE (%) |
| --- | --- | --- |
| 1st | snowyowl | 8.55334 |
| 2nd | Ghost Ducks | 8.55446 |
| 3rd | net | 9.79016 |
| 4th | iDMG | 10.21327 |
| 5th | SuperDrAI | 11.49168 |
| 6th | NUDTXD | 11.69844 |
| 7th | FNeters | 12.70369 |
| 8th | NetRevolution | 13.12746 |
| 9th | GoGoGo | 15.37968 |
| 10th | chainnet | 18.12810 |
| 11th | Graph-X | 18.66807 |
| 12th | CCI | 21.18417 |
| 13th | BJIP | 37.50674 |

Overview

In recent years, the networking community has produced robust Graph Neural Networks (GNN) that can accurately mimic complex network environments. Modern GNN architectures enable building lightweight and accurate Network Digital Twins that can operate in real time. However, the quality of ML-based models depends on two main components: the model architecture, and the training dataset. In this context, very little research has been done on the impact of training data on the performance of network models.

This edition of the Graph Neural Networking challenge focuses on a fundamental problem of current ML-based solutions applied to networking: how to generate a good dataset. We invert the format of traditional ML competitions, which follow a model-centric approach. Instead, we propose to explore a data-centric approach for building accurate Network Digital Twins.

Problem statement

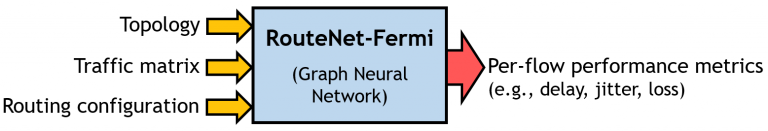

Participants will be given a state-of-the-art GNN model for network performance evaluation (RouteNet-Fermi), and a packet-level network simulator to generate datasets. They will be tasked with producing a training dataset that results in better performance for the target GNN model.

The training dataset should help the GNN model scale effectively to samples of larger networks than those seen during training.

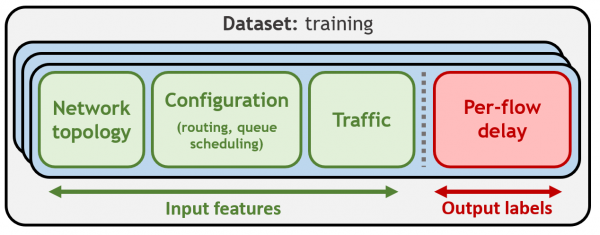

Figure 1: Schematic representation of the training dataset requested in this challenge

GNN model

RouteNet-Fermi is a GNN-based model for network performance evaluation. It takes a network state description as input and outputs estimates of flow-level performance metrics (e.g., delay, jitter, loss). The model supports network scenarios with arbitrary topologies, configurations (routing, queue scheduling), and flow-level traffic descriptions.

In this challenge, we focus on predicting only the per-path mean delay on networks.

We provide an open-source implementation of RouteNet-Fermi in TensorFlow with fixed training parameters. More details about the internal architecture of this model can be found in our technical report [Link].

Network Simulator and Dataset

To generate training datasets, we provide an accurate packet-level network simulator based on OMNeT++ (Docker image). Simulating real networks at scale with packet-level simulators is computationally very expensive, because the cost is proportional to the number of packet events in the network. To make the problem tractable, we have scaled it down so that participants can quickly generate their training datasets on commodity hardware. Training datasets are limited to a maximum of 100 samples, and these samples must come from small networks of up to 10 nodes.

We also provide a validation dataset. Participants can use this dataset to test the performance of RouteNet-Fermi after being trained with their own datasets. This validation dataset contains samples from networks up to 300 nodes, including larger link capacities. A fundamental part of the challenge is to generate samples in small networks (up to 10 nodes) that can help the model generalize to larger networks with higher link capacities. A complete list with all the constraints that the training dataset must satisfy can be found [here].
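As a rough illustration of the two headline constraints stated above (at most 100 samples, each from a network of at most 10 nodes), a dataset could be sanity-checked before training along the following lines. The `Sample` class and the `check_dataset` function are hypothetical stand-ins introduced only for this sketch; they are not the format produced by the simulator, and the remaining constraints from the complete list are not covered.

```python
from dataclasses import dataclass
from typing import List

MAX_SAMPLES = 100  # limit stated in the challenge description
MAX_NODES = 10     # limit stated in the challenge description


@dataclass
class Sample:
    """Hypothetical stand-in for one simulated sample (not the simulator's format)."""
    name: str
    num_nodes: int


def check_dataset(samples: List[Sample]) -> List[str]:
    """Return human-readable violations; an empty list means both checks pass."""
    problems = []
    if len(samples) > MAX_SAMPLES:
        problems.append(f"dataset has {len(samples)} samples (max {MAX_SAMPLES})")
    for sample in samples:
        if sample.num_nodes > MAX_NODES:
            problems.append(
                f"{sample.name}: {sample.num_nodes} nodes (max {MAX_NODES})"
            )
    return problems


if __name__ == "__main__":
    dataset = [Sample("s0", 8), Sample("s1", 10), Sample("s2", 12)]
    for problem in check_dataset(dataset):
        print(problem)
```

Returning a list of violations rather than failing on the first one makes it easier to fix a whole dataset in one pass.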

To test the capability of RouteNet-Fermi to potentially scale to larger networks, we have trained it with a very large dataset with thousands of samples of networks up to 10 nodes. After training, we could validate that the model was able to produce accurate per-path delay estimates on the validation dataset (Mean Relative Error < 5%).

But is it possible to achieve such a level of accuracy with training datasets of limited size (e.g., 100 samples or fewer)? This remains an open question for the participants of this challenge! We hope it can help generate groundbreaking knowledge on Data-Centric AI for networking.

Resources

All the main resources can be found at the following GitHub repository:

This repository includes three Jupyter Notebooks that should serve as a quick-start tutorial:

- quickstart.ipynb: Step-by-step tutorial with the entire execution pipeline for the challenge (dataset generation & model training).

- input_parameters_glossary.ipynb: Explains how to generate datasets with the simulator, which parameters can be modified, and what constitutes a valid dataset.

- dataset_visualization.ipynb: Code to visualize and analyze the generated datasets.

Other useful resources for the challenge:

- Summary slides [Link].

- Technical report of RouteNet-Fermi [Link].

- Mailing list for questions and comments related to the challenge [Link to subscribe].

Evaluation

The evaluation objective is to test the accuracy of RouteNet-Fermi after being trained with the participants’ training datasets.

At the end of the challenge (Oct 1st-Oct 15th), we will ask participants for their training datasets and the RouteNet-Fermi models trained with those datasets.

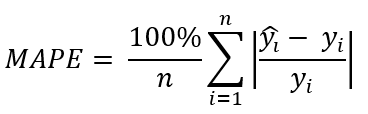

We will run a score-based evaluation on a new test dataset. To this end, we will use the Mean Absolute Percentage Error (MAPE) computed over the delay predictions produced by the trained models.

The solutions with the lowest MAPE score will be the winners.
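For reference, MAPE is conventionally defined as the mean of |true - predicted| / |true| over all samples, expressed as a percentage. The sketch below computes it for lists of per-path delays under that standard definition; the function name `mape` and the sample values are illustrative assumptions, not the organizers' evaluation code.

```python
from typing import Sequence


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Absolute Percentage Error, in percent (standard definition)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one sample is required")
    total = 0.0
    for true_value, predicted in zip(y_true, y_pred):
        if true_value == 0:
            raise ValueError("MAPE is undefined when a true value is zero")
        total += abs((true_value - predicted) / true_value)
    return 100.0 * total / len(y_true)


if __name__ == "__main__":
    # Made-up per-path mean delays, for illustration only.
    true_delays = [1.0, 2.0, 4.0]
    predicted_delays = [1.1, 1.8, 4.0]
    print(f"MAPE: {mape(true_delays, predicted_delays):.2f}%")
```

Because each error is divided by the true delay, paths with very small delays weigh as much as paths with large ones.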

NOTE: The training is fixed to 20 epochs and it produces 20 different model checkpoints. Participants can select the best model generated during training (not necessarily the last one) and use it for their submissions.
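One way to choose among the 20 checkpoints is to score each one on the validation dataset and keep the one with the lowest MAPE. The sketch below assumes those per-epoch scores have already been collected into a dictionary; the function name `best_checkpoint` and the numbers are hypothetical, and how the scores are obtained from the provided implementation is left out.

```python
from typing import Dict, Tuple


def best_checkpoint(val_mape_by_epoch: Dict[int, float]) -> Tuple[int, float]:
    """Return (epoch, MAPE) for the checkpoint with the lowest validation MAPE."""
    if not val_mape_by_epoch:
        raise ValueError("no checkpoint scores were provided")
    epoch = min(val_mape_by_epoch, key=val_mape_by_epoch.get)
    return epoch, val_mape_by_epoch[epoch]


if __name__ == "__main__":
    # Made-up validation scores for a few of the 20 epochs.
    scores = {1: 30.0, 2: 12.5, 3: 14.0, 4: 13.1}
    epoch, score = best_checkpoint(scores)
    print(f"best checkpoint: epoch {epoch} with validation MAPE {score}%")
```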

During the evaluation, participants will be able to submit their solutions automatically on our evaluation platform and will see a provisional ranking with the scores of the top teams.

NOTE: Participants will not have access to the test dataset. However, this dataset will contain samples with similar distributions to those of the validation dataset released at the beginning of the challenge.

Final submissions and awards

After the end of the score-based evaluation phase, a provisional ranking of all teams will be posted on this website.

Then, the top-5 teams must send the organizers: (i) a script to generate their training dataset, (ii) the RouteNet-Fermi model already trained with their dataset, and (iii) a brief report describing the solution and how to reproduce it (1-3 pages). The organizers will review these materials to check that the solutions comply with all the rules of the challenge.

—————–

This year we will organize the 1st GNNet workshop, co-located with ACM CoNEXT (December 2022). Top teams will be invited to present their solutions there. More details about this workshop can be found below:

https://bnn.upc.edu/workshops/gnnet2022

—————–

The winning team of the Graph Neural Networking challenge will receive a cash prize of 1,000 CHF if the Judges Panel from the ITU AI/ML in 5G Challenge determines that the solution satisfies the judging criteria.

Also, top-3 teams will advance to the Grand Challenge Finale of the ITU AI/ML in 5G Challenge:

- 1st prize: 3,000 CHF

- Runner-up: 2,000 CHF

The organizers encourage the publication of papers describing the best solutions, while always respecting the wishes of participants who prefer to keep their solutions private.

Rules

IMPORTANT NOTE: Filling in the registration form is mandatory to officially participate in this challenge.

Please note that you must also register in the portal of the ITU AI/ML in 5G Challenge (“Login” and “Create Team”): https://challenge.aiforgood.itu.int/match/matchitem/69

All participants must satisfy the following rules:

- Participants can work in teams of up to 4 members (i.e., 1-4 members). All team members must be listed at the beginning (in the registration form) and will be considered to have contributed equally.

- Training datasets generated by participants must only include samples generated with the network simulator provided to them (Docker image).

- All the RouteNet-Fermi model checkpoints uploaded to the evaluation platform must be exclusively trained with samples of the training datasets submitted by participants. Also, the training parameters of RouteNet-Fermi must not be modified from those of the original implementation provided to participants (e.g., training epochs, learning rate).

- During the evaluation process, each team can have only one submission in progress at a time. Note that the evaluation platform can take some time to process submissions, as it needs to compute the evaluation score over the test dataset. Also, each team can submit a maximum of 5 solutions (i.e., submission files) per day and up to 20 submissions in total during the whole evaluation phase.

- All training datasets must comply with the constraints detailed [here]. Samples from the validation dataset provided to participants cannot be included in the training dataset. After the evaluation phase, the top-5 solutions will be reproduced to check that their training datasets satisfy all the specified constraints. The Organizing Committee reserves the right to reject solutions that show strong evidence of cheating, for example, if the performance measured in the organizers’ lab does not match the performance of the trained models provided by participants.

- The challenge is open to all participants except members of the Organizing Committee, members of the Scientific Advisory Board, and members from the Barcelona Neural Networking Center at UPC.
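Teams that want to plan their submissions around the limits in the rules above (5 per day, 20 in total) could keep a small local tally such as the one sketched here. The class `SubmissionBudget` is a hypothetical helper written for this illustration; it is unrelated to the evaluation platform and does not model the one-submission-at-a-time rule.

```python
from collections import Counter
from datetime import date

MAX_PER_DAY = 5   # daily limit stated in the rules
MAX_TOTAL = 20    # overall limit stated in the rules


class SubmissionBudget:
    """Local tally of submissions per day (hypothetical helper)."""

    def __init__(self) -> None:
        self._per_day: Counter = Counter()

    @property
    def total(self) -> int:
        return sum(self._per_day.values())

    def can_submit(self, day: date) -> bool:
        """True if one more submission on `day` stays within both limits."""
        return self._per_day[day] < MAX_PER_DAY and self.total < MAX_TOTAL

    def record(self, day: date) -> None:
        """Register one submission, refusing it if a limit would be exceeded."""
        if not self.can_submit(day):
            raise RuntimeError(f"submission limit reached for {day.isoformat()}")
        self._per_day[day] += 1


if __name__ == "__main__":
    budget = SubmissionBudget()
    today = date(2022, 10, 3)  # example date, for illustration only
    for _ in range(MAX_PER_DAY):
        budget.record(today)
    print("can submit again today:", budget.can_submit(today))
    print("submissions left overall:", MAX_TOTAL - budget.total)
```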

If in doubt about the rules, participants are encouraged to contact the organizers proactively at the following email address: gnnetchallenge@bnn.upc.edu.

Contact and updates

Subscribe to [Challenge2022 mailing list] for announcements and questions/comments.

Please note that you must subscribe to the mailing list before sending any email. Otherwise, emails are dropped by default.

Timeline

- Challenge duration: May-Nov 2022

- Open registration: May 27th-Sep 30th 2022

- Release of tools and validation dataset: June 30th 2022

- Score-based evaluation phase: Oct 1st-Oct 15th 2022 / Oct 3rd-Oct 18th 2022

- Provisional ranking of all the teams: Oct 16th 2022 / Oct 19th 2022

- Top-5 teams submit the dataset, code and documentation: Nov 1st 2022

- Final ranking and official announcement of top-3 teams: Nov 2022

- Award ceremony and presentations: Dec 9th 2022

Please note that these dates are tentative and may change slightly over the course of the challenge. Stay tuned for further updates via the mailing list and also on this website.

Organizing Committee

- José Suárez-Varela, Telefónica Research

- Miquel Ferriol, BNN-UPC

- Albert López, BNN-UPC

- Jordi Paillissé, BNN-UPC

- Carlos Güemes, BNN-UPC

- Prof. Albert Cabellos, BNN-UPC

- Prof. Pere Barlet-Ros, BNN-UPC

Scientific Advisory Board

- Krzysztof Rusek, AGH University of Science and Technology

- Fabien Geyer, Technical University of Munich

- Bruno Klaus, Federal University of São Paulo

Want to explore the application of GNNs to networks?

Check out IGNNITION

Framework for fast prototyping of GNNs for communication networks

Developed by networking enthusiasts for scientists and engineers of the field.

Build a custom GNN tailored to your networking problem.

Cite this competition

Plain text (IEEE format):

J. Suárez-Varela, et al., “The Graph Neural Networking challenge: a world-wide competition for education in AI/ML for networks,” ACM SIGCOMM Computer Communication Review, vol. 51, no. 3, pp. 9–16, 2021.

BibTEX:

@article{suarez2021graph,

title={The graph neural networking challenge: a worldwide competition for education in AI/ML for networks},

author={Su{\'a}rez-Varela, Jos{\'e} and others},

journal={ACM SIGCOMM Computer Communication Review},

volume={51},

number={3},

pages={9--16},

year={2021}

}
